Computer Vision and Applications

By: Ciopages Staff Writer

Updated on: Feb 25, 2023

In his short-story collection ‘I, Robot,’ science fiction writer Isaac Asimov predicted that robots and artificial intelligence (AI) would be banned from the Earth in the year 2030. With that date fast approaching, his prediction doesn’t look like it could be much further from the truth.

AI has become a mainstream part of everyday life. An increasingly vast amount of data, which is forecast to grow 61% to 175 zettabytes by 2025, is helping us make sense of even the most complex processes. Algorithms driven by AI have become standard, with Amazon, Facebook, Netflix, and Google, amongst others, leading the way with personalization and recommendation engines. According to Netflix, 75% of what users watch comes from AI-generated suggestions.

The majority of AI solutions on the market today use machine learning, an application of AI that predicts future actions from past experience (data). However, the inherent problem with the technique is that while such solutions can make accurate decisions from data, often more effectively than humans, the machine does not understand why.

One field that is seeing significant growth in use cases and investment is computer vision. It aims to address some of these limitations by enabling computers to process the visual world in much the same way that humans do. Advances in deep learning frameworks are now allowing great leaps in computer vision, and it is already able to surpass humans in some tasks.

In this article, we will look at what computer vision is, how it works, and some of the most compelling use cases for this powerful application of AI.

What is Computer Vision (CV)?

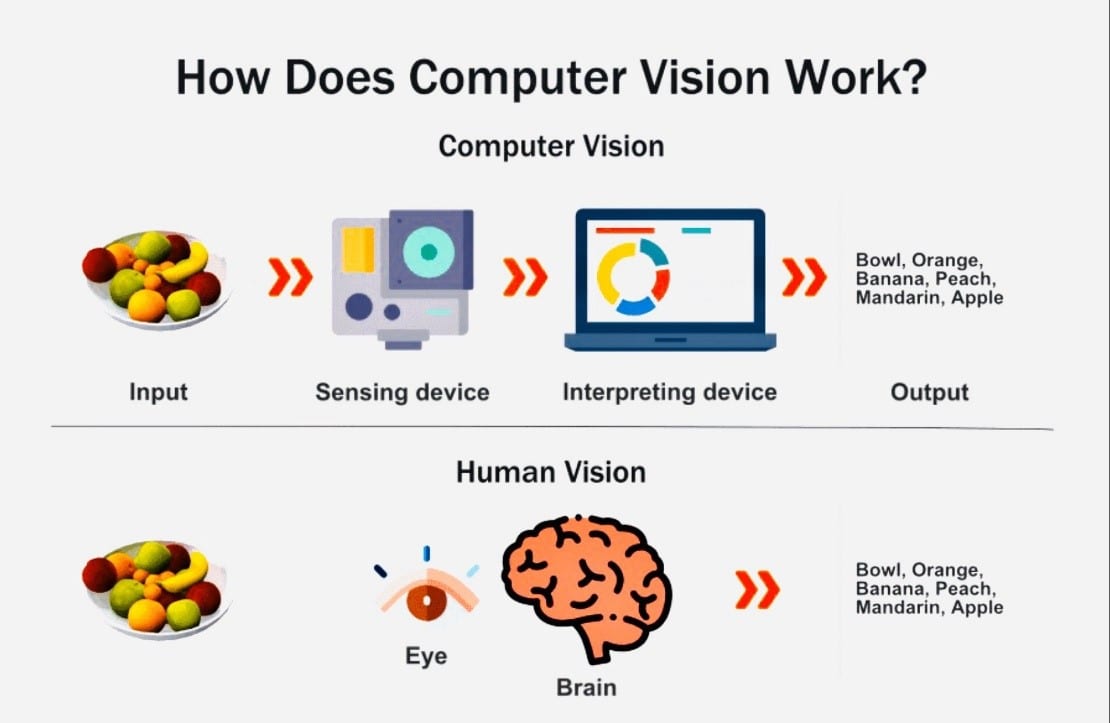

Computer vision is a field of study that looks to develop techniques that allow computers to see and understand the content of digital images such as photographs and videos. CV goes beyond just putting a camera on your computer or laptop. The objective is to help machines view the world as people or animals do, which is no small feat. CV helps machines understand the things they see. A laptop camera can capture whatever is in front of it, but on its own it cannot tell that it is looking at a human being. Arguably the most famous use case of computer vision is one that we’ve all taken for granted: barcodes. These tiny black and white strips have been around for years to assist humans with product scanning.

Computer vision is like the part of the human brain that processes what an image means, with the camera being the part that sees the image, like our eyes.

CV has been able to evolve at pace in the last decade, thanks to improvements in expertise, technology, and computing power. Historically, machine learning engineers could build software that predicts cancer survival rates better than humans. However, doing so took potentially dozens of data science and clinical experts working as a team.

Modern-day deep learning methods rely on neural networks that take those original concepts to a new level. When a neural network is loaded with labeled examples of data, it can quickly extract patterns into mathematical equations, which it then uses to classify future pieces of information. For instance, to recognize a face, the deep learning framework just needs lots of images and none of the hand-built steps in between, as was the case a decade or so ago.
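As a rough illustration of learning from labeled examples, the sketch below trains a perceptron, a single artificial neuron that is far simpler than the deep networks described above, on a handful of made-up 2x2 images. The pixel values, the labels (1 for "top-lit", 0 for "bottom-lit"), and the function names are all assumptions chosen for the example, not part of any real system.

```python
# Each example is (pixels, label). Pixels are a flattened 2x2 image:
# [top-left, top-right, bottom-left, bottom-right].
# Label 1 means "top-lit", label 0 means "bottom-lit" (hypothetical classes).
LABELED_EXAMPLES = [
    ([1, 1, 0, 0], 1),
    ([0, 0, 1, 1], 0),
    ([1, 0, 0, 0], 1),
    ([0, 0, 0, 1], 0),
    ([0, 1, 0, 0], 1),
    ([0, 0, 1, 0], 0),
]


def predict(weights, bias, pixels):
    """Return 1 if the weighted sum of the pixels is positive, else 0."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1 if score > 0 else 0


def train(examples, epochs=10):
    """Perceptron rule: nudge the weights whenever a prediction is wrong."""
    weights = [0] * len(examples[0][0])
    bias = 0
    for _ in range(epochs):
        mistakes = 0
        for pixels, label in examples:
            error = label - predict(weights, bias, pixels)
            if error != 0:
                mistakes += 1
                weights = [w + error * p for w, p in zip(weights, pixels)]
                bias += error
        if mistakes == 0:
            break  # every training example is now classified correctly
    return weights, bias


if __name__ == "__main__":
    weights, bias = train(LABELED_EXAMPLES)
    # Classify two images that were not in the training data.
    print(predict(weights, bias, [1, 1, 1, 0]))
    print(predict(weights, bias, [0, 1, 1, 1]))
```

Nobody tells the program that the top row matters. The weights that end up positive for the top pixels and negative for the bottom pixels come entirely from the labeled examples, which is the point the paragraph above makes at a much larger scale.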

How Computer Vision Works

CV is about recognizing patterns. In the same way that traditional machine learning methods analyze strings of data, CV will ingest all the attributes of an image or video and find things that match what it already knows. A machine using CV could use several techniques to get the information it needs.

One of the most common applications of CV is understanding static images like photographs. You have probably seen for some time how Facebook can recognize the faces of people in a picture. In May 2020, the social media giant announced a new AI tool that can automatically identify items that users put up for sale in the marketplace. Head of Applied Computer Vision at Facebook, Manohar Paluri, says that “we want to make anything and everything on the platform shoppable, whenever the experience feels right.”

The word “experience” is essential here. Computer vision changes the way that we experience things, rather than just automating them.

Computer Vision Techniques

There are many techniques for deploying computer vision.

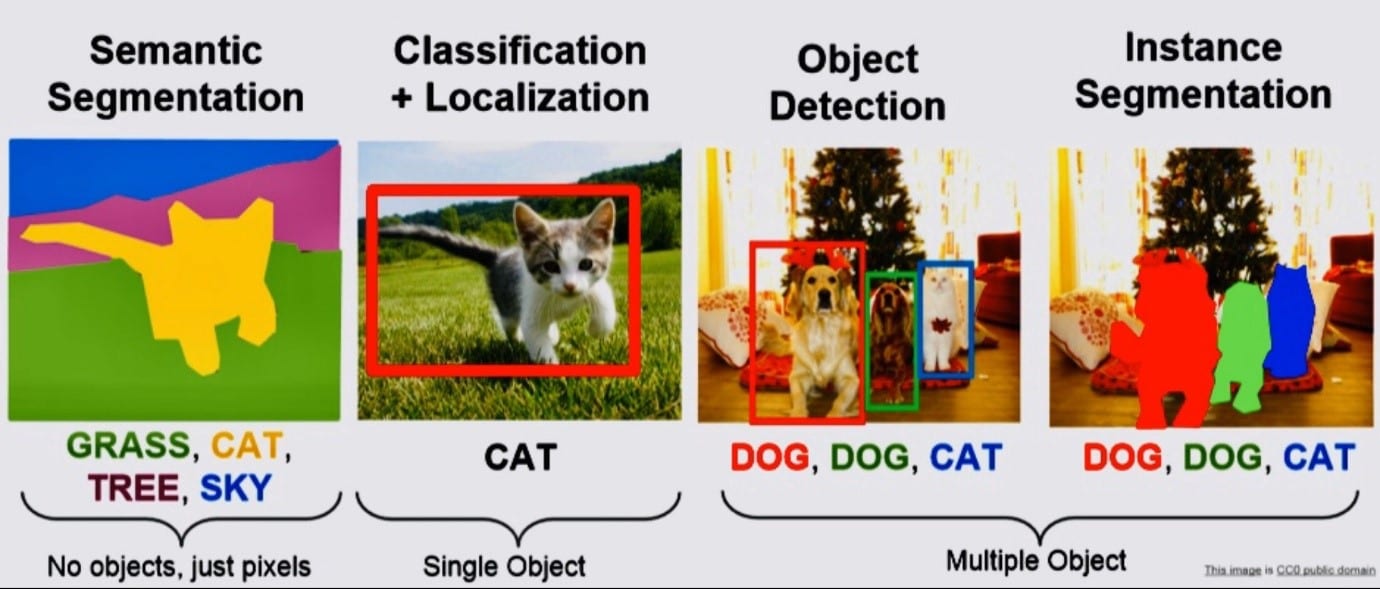

- Object Classification: Works out the broad category that a photograph falls into.

  - We are matching the images to pre-existing labels from training data. For example, can the machine see an animal in the photograph?

- Object Identification: What type of object is in the image following the classification?

  - If we know the image is an animal, is it a cat or a dog?

- Object Verification: Checks whether an object exists in a photograph.

  - If we are looking for pictures of a cat, does one exist in the photograph?

- Object Detection: Finds where the objects are in a photograph.

  - This technique works out where each object sits in the image, usually by drawing a bounding box around it. It should not be confused with edge detection, which only finds sharp changes in brightness.

- Object Landmark Detection: Works out the key features or landmarks that exist within the object.

  - We are using shapes, colors, and visual indicators to better understand what is happening in an image or video.

- Object (Instance) Segmentation: What pixels belong to the object in the image?

  - Marks the boundaries of objects at the pixel level, unlike classification, which assigns a class to the entire image.

- Object Recognition: What are the objects, and where are they in the image?

  - Ascertains which objects are in the image and where all the individual components are situated.
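To make the difference between some of these outputs concrete, here is a minimal sketch on a toy image. It assumes the hard part is already done: every pixel in the grid has been given a label by some model (0 for background, 1 for "cat"). The grid, the label numbers, and the function names are hypothetical.

```python
# A toy 5x6 "image" where a model has already labeled each pixel:
# 0 = background, 1 = cat (hypothetical labels for illustration).
MASK = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]


def object_pixels(mask, label):
    """Segmentation-style output: every (row, col) that belongs to the object."""
    return [
        (r, c)
        for r, row in enumerate(mask)
        for c, value in enumerate(row)
        if value == label
    ]


def verify(mask, label):
    """Verification-style output: is the object present at all?"""
    return bool(object_pixels(mask, label))


def bounding_box(mask, label):
    """Detection-style output: (top, left, bottom, right), or None if absent."""
    pixels = object_pixels(mask, label)
    if not pixels:
        return None
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    return (min(rows), min(cols), max(rows), max(cols))


if __name__ == "__main__":
    print(verify(MASK, 1))              # is there a cat?
    print(bounding_box(MASK, 1))        # where is it, roughly?
    print(len(object_pixels(MASK, 1)))  # exactly which pixels are cat?
```

Verification answers with a yes or no, detection with a box, and segmentation with the full set of pixels, so each step carries more detail than the one before it.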

Applications of computer vision will typically utilize one of these methods. However, more sophisticated cases, such as autonomous vehicles, will rely on several of them together to accomplish their goals. The image below gives an example of some of these in practice, using cats and dogs.

Source: https://medium.com/@neha49712/computer-vision-the-most-demanding-area-64f23595a75d

Machines tend to struggle when they see an image in a state that they do not expect. A photograph of an everyday object that is obvious to a human can be a challenge for a computer. An example of CV in practice is the viral app “Not Hotdog.” In its own right, the functional purpose of the app is mostly unimpressive, but you must consider the types of neural networks operating in the background. Once you think about precisely what is happening behind the scenes, “Not Hotdog” starts to border on being quite an amazing feat of AI.

The app determines whether an image is a hotdog or not. Essentially, it is doing a task that a young child would be able to complete on the majority of occasions. The challenge comes when the hotdog is in different states. For example, how does the machine recognize a hotdog both in and out of a bun? Similarly, what if the object inside the bun is a banana instead of a sausage? A machine must be intelligent enough to understand whether the specific object it is looking for is present within the image. Not Hotdog is impressive in that it can tell the difference between types of sausage, such as a hotdog and a bratwurst. Machines need to be at this level on a grand scale for computer vision to be a success.

Source: Engadget

A machine will need to ingest millions of images for computer vision to be accurate enough for real-world commercial applications. For example, for a tool to recognize a cat, first, you will need to feed it with millions of cat photos so that it can learn. The algorithms will analyze the pictures for the distance between shapes, colors in the image, and how object borders meet each other. It will then understand what a “cat” is, rather than only seeing objects.

Once the computer has enough data, it can use the experience to untangle new images and find those that contain a cat. “Not Hotdog” works in a similar way to this example. When the image reaches a minimum threshold to be understood as a hotdog or not, it declares a result.

Source: https://medium.com/@neha49712/computer-vision-the-most-demanding-area-64f23595a75d
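The thresholding step described above can be sketched in a few lines. This is not how the real “Not Hotdog” app is implemented, which the article does not describe. It assumes a model that outputs a single raw score for "hotdog", converts that score to a probability with a sigmoid, and compares it with a cut-off. The function names and the default threshold of 0.5 are assumptions.

```python
import math


def to_probability(raw_score):
    """Squash a raw model score into the range 0..1 with a sigmoid."""
    return 1.0 / (1.0 + math.exp(-raw_score))


def declare(raw_score, threshold=0.5):
    """Declare a result once the probability reaches the minimum threshold."""
    probability = to_probability(raw_score)
    return "Hotdog" if probability >= threshold else "Not hotdog"


if __name__ == "__main__":
    print(declare(2.0))                 # fairly confident score
    print(declare(-1.0))                # score leaning the other way
    print(declare(2.0, threshold=0.9))  # same score, stricter cut-off
```

Raising the threshold makes the system more cautious about declaring a hotdog, at the cost of missing some genuine ones. Where to set it depends on which kind of mistake is more costly for the application.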

The “Not Hotdog” and cat recognition examples are incredibly trivial, but there are industries where CV is making significant progress.

Data, data, data

With computer vision applications, the focus can often drift towards the user interface or end product. However, such systems are only useful if they have massive amounts of quality data feeding into them.

For example, imagine you want to develop a system that can recognize symptoms of disease using computer vision. Such a system carries a great deal of responsibility, with the lives of people at stake. For it to be effective, there needs to be sufficient data from which to learn. Beyond that, it must be high-quality data.

High-quality data should be relevant, complete, and without bias. If we were building a tool to detect the early signs of cancer, loading the machine with pictures of heart conditions would not serve the correct purpose. A computer needs to have all the necessary elements to make a reasonable decision.

The process will typically start with skilled annotators who label the images so that the models can be trained efficiently. The models trained on these annotations then need to go through extensive testing against a large number of example cases before going into deployment.

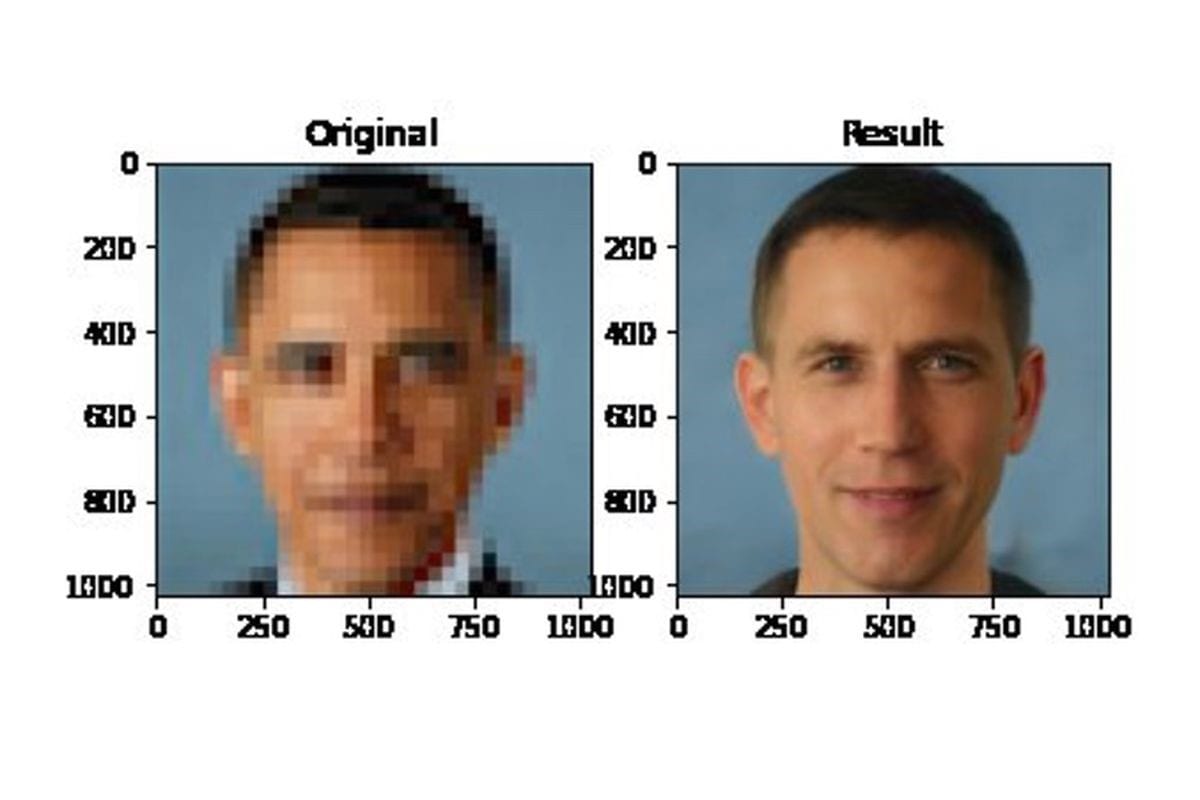

In a recent case, a computer vision platform was shown to be biased, interpreting a pixelated image of Barack Obama as a white man.

Any testing should look to eradicate this type of bias from the computer vision model. Achieving this takes diverse datasets, suitable goals, and quality assurance checks. Companies such as Anolytics offer a service that provides high-quality training data for computer vision models. They are helping with annotations in sectors like healthcare, retail, and agriculture to ensure that models get the optimal performance.
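One simple quality assurance check of this kind is to count how often each group or class appears in the labeled data before training. The sketch below is only an illustration of that idea. The group names, the 20% minimum share, and the function name are assumptions, and a real audit would look at far more than raw counts.

```python
from collections import Counter


def underrepresented(labels, min_share=0.2):
    """Return each label whose share of the dataset falls below min_share."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {
        label: count / total
        for label, count in counts.items()
        if count / total < min_share
    }


if __name__ == "__main__":
    # Hypothetical annotation labels for ten training images.
    labels = ["group_a"] * 8 + ["group_b"] + ["group_c"]
    print(underrepresented(labels))
```

A dataset that fails a check like this is a warning sign that the model may perform poorly on the groups it has rarely seen, which is the kind of bias described above.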

Use cases for Computer Vision

There are lots of sectors benefitting from computer vision technology, and not all of them are trying to establish if a photograph is or isn’t a hotdog. The examples below show the tremendous real-world advantages that companies are gaining from the field.

Computer Vision in Healthcare

The application of computer vision in healthcare is both ground-breaking and controversial. In short, AI vendors can build tools that provide a patient diagnosis in seconds and assist with complicated tasks like surgery. The significant advantage here is that doctors and nurses can spend time focusing on patient care, rather than reviewing notes and data or completing administrative work.

Diagnostics

Computer vision can help lead to a more accurate diagnosis of life-threatening conditions. Moreover, it can catch symptoms early enough to offer the best form of treatment before it is too late. The US and Israeli-based company MaxQ AI has computer vision software that can identify anomalies in patient brain scans. By using the technology, professionals have time to work on the best course of treatment for patients, rather than figuring out a complex diagnosis. Doctors will still need to validate the decisions that machines make rather than allowing computers to replace them.

The issue that arises from this form of diagnosis is that of trust. For example, if a doctor gets a diagnosis or decision wrong, somebody is automatically accountable for the action. However, in the case of a machine, who is responsible, and who should be reprimanded, if it makes the wrong call about somebody’s health? In response to this ethical dilemma, MaxQ says that its AI is aimed explicitly at augmenting human decision-making as opposed to replacing it.

Medical Imaging

Arterys has computer vision technology that can create 3D models of a patient’s heart on a radiologist’s computer screen. The software aims to reduce the time a radiologist spends scanning patients. Just like the solution for diagnostics, it lets the clinician focus their time on caring for patients rather than doing administrative or repetitive tasks. The user needs no technical training, and the software can produce a 3D image of the heart within just 10 minutes, all thanks to the training data that feeds it.

Arterys is trained on millions of images of the heart and its surrounding area. Quality training data helps to ensure very accurate results.

Retail

Facebook is not the only one of the big technology companies investing in computer vision. Amazon is bringing computer vision tools to the retail market with its Amazon Go stores. The outlets recognize people as they enter using computer vision-driven sensors. As shoppers go through the stores, more sensors can see what they add to and remove from their basket. Each time they do so, the system can automatically charge or refund them for an item via their Amazon account.

When a shopper wants to leave, they can just walk out without worrying about cash or card. Everything is handled automatically through the shopper’s Amazon account. Trials of the stores were generally successful, but there are flaws around the fuzzy recognition of items, such as when the view of a product is not 100% clear.
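The bookkeeping behind such a store can be pictured as a running basket that is updated by events from the vision system. The sketch below is a simplified illustration, not Amazon’s implementation: it assumes the sensors emit clean "add" and "remove" events, it settles once at the exit rather than charging per event, and the item names and prices (in cents) are made up.

```python
from collections import Counter

# Hypothetical price list, in cents to avoid floating-point rounding.
PRICES_CENTS = {"sandwich": 450, "water": 120, "apple": 80}


def settle_basket(events, prices):
    """Replay (action, item) events and return the final basket and total."""
    basket = Counter()
    for action, item in events:
        if action == "add":
            basket[item] += 1
        elif action == "remove":
            if basket[item] == 0:
                raise ValueError(f"cannot remove {item!r}: not in basket")
            basket[item] -= 1
        else:
            raise ValueError(f"unknown action {action!r}")
    # Keep only the items the shopper actually walks out with.
    items = {item: qty for item, qty in basket.items() if qty > 0}
    total_cents = sum(prices[item] * qty for item, qty in items.items())
    return items, total_cents


if __name__ == "__main__":
    events = [
        ("add", "sandwich"),
        ("add", "water"),
        ("add", "apple"),
        ("remove", "apple"),  # shopper puts the apple back on the shelf
        ("add", "water"),
    ]
    print(settle_basket(events, PRICES_CENTS))
```

The fuzzy recognition problem mentioned above shows up here as a wrong or missing event, which is why the accuracy of the vision system matters more than the arithmetic that follows it.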

If Amazon can iron out minor glitches, there is an opportunity for computer vision stores to proliferate on the high street and rejuvenate offline sales.

Computer Vision in Agriculture

Agriculture is not always an industry that jumps to mind when thinking about disruption through technology. The primary use case in the sector for technology is to optimize operational efficiencies.

For example, on a traditional farm, a farmer will manually review their crops and look to see if there are any threats to the yield. Instead, they can invest in drone technology. Drones carry sensors that can scan the land and take pictures from an aerial view. Computer vision can work in real time to browse the images and look for common issues like an infestation or slow growth.

Rather than a farmer taking days to review a crop, drones can report back in minutes when coupled with computer vision software. Millions of images representing what both good and bad growth look like are loaded into the software. The photos are labeled so that the models can map new pictures to the most appropriate representation of what they see.

By using computer vision, farmers can save hours of their own time.
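The idea of mapping a new picture to the closest labeled example can be illustrated with a nearest-neighbor lookup. This is a deliberately simple stand-in for the deep learning models a real crop-monitoring product would use. It assumes each photo has already been reduced to its average (red, green, blue) color, and the numbers, labels, and function names are invented for the example.

```python
# Hypothetical labeled photos, each summarized by its average (R, G, B) color.
LABELED_PHOTOS = [
    ((40, 160, 50), "healthy"),
    ((50, 170, 60), "healthy"),
    ((150, 140, 60), "stressed"),
    ((160, 120, 50), "stressed"),
]


def squared_distance(a, b):
    """Squared Euclidean distance between two color tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(features, labeled_photos):
    """Return the label of the labeled photo closest to the new one."""
    _, label = min(
        labeled_photos,
        key=lambda example: squared_distance(features, example[0]),
    )
    return label


if __name__ == "__main__":
    print(classify((60, 150, 55), LABELED_PHOTOS))   # mostly green patch
    print(classify((155, 130, 55), LABELED_PHOTOS))  # yellowing patch
```

With only four labeled photos the result is fragile, which is why the text above talks about millions of images: the more labeled examples there are, the more likely a new picture has a close and trustworthy match.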

Computer Vision in Financial Services

Banks have been turning to AI applications over the last few years to analyze data better and make decisions about potential risks. Computer vision is relatively new to banking but comes with many opportunities.

For example, in 2017, Wells Fargo announced that 13,000 ATMs would work without debit cards. Instead of using a physical card, users can operate ATMs via a code sent to their smartphone. Apple, Google, and Samsung all have their own payment systems, which can get rid of physical cards as we look towards a fully digital society.

Biometric data such as an iris scan can verify customers and authorize transactions. Many customers are already using fingerprint technology via their smartphone to log in to banking applications. Both of these are examples of computer vision in the commercial finance world.

Elsewhere in financial services, insurance companies could use computer vision to settle claims immediately. Instead of a lengthy form-filling process, an image showing car or property damage can be instantly matched to a policy, aligning the terms and authorizing payments.

Computer Vision in Education

In education, teachers can observe a class without interrupting activities. For example, low-cost cameras, tablets, and mobiles using computer vision can review how students study and react to different materials. In a non-intrusive way, the teacher can spot behavioral changes in students and adapt the learning methods appropriately. Technology allows teachers to focus on what they teach rather than trying to split their time between many students.

Characteristics such as eye movements, posture, and interactions can help teachers arrange courses for the personality types of individual students. When it comes to group projects, they can ensure that there is a healthy mix of people to help get the best results.

The future of computer vision

As the industry case studies in this article show, computer vision has the potential to change the way several sectors and companies operate. It is fair to say that some of those applications are nothing short of ground-breaking, where they may even prevent the onset of previously incurable illnesses.

The public, however, is still coming to terms with trusting machines over humans. The issue is most prevalent in the healthcare and legal industries, where the machine will have a direct impact on people’s lives. However, as more companies test and learn with the technology, they will be able to take that experience and develop firm ethical procedures and policies.

As of now, there are not any genuine use cases where computer vision is ready to replace human counterparts completely. While it can certainly do a great job of augmenting human work, any company willing to take the plunge on machines doing the work in totality would be taking a considerable risk.

In the future, as algorithms improve and technologies like 5G, edge, and cloud computing continue to develop, more applications will remove the need for human intervention. That time is possibly still many years away, but every year and every development moves the world a step closer.