Addressing Bias in Artificial Intelligence

By: Ciopages Staff Writer

Updated on: Feb 25, 2023

Addressing bias in artificial intelligence is a non-trivial matter. Human biases exist in many aspects of life. There are times when personal beliefs stand in the way of making a rational decision, or when information is framed in a way that leads to a biased result. Bias can affect whether the best candidate gets a job, whether we make the right investment decision, or even how we name our children.

As artificial intelligence (AI) platforms are adopted at an accelerating rate in many industries to make crucial decisions, there is a risk of biases creeping into them. If bias occurs in human behavior, any AI platform trained on data from that behavior can absorb the same prejudice and produce flawed technology. For example, if a company whose workforce is 95% male decided to use AI to help with hiring decisions, a model trained on its past hires could learn to treat being male as a desirable attribute.

In this article, we will look at bias in AI and how companies can strive to mitigate it when deploying their solutions.

How AI becomes biased

Although AI systems can reduce human bias in decision making, they can also make the situation far worse. In one study, an algorithm used in the criminal justice system labeled African-American defendants as high-risk at twice the rate of white defendants. This is a case where the data fuelling the algorithm is so full of societal stereotypes, here about race, that it leads to poor decisions.

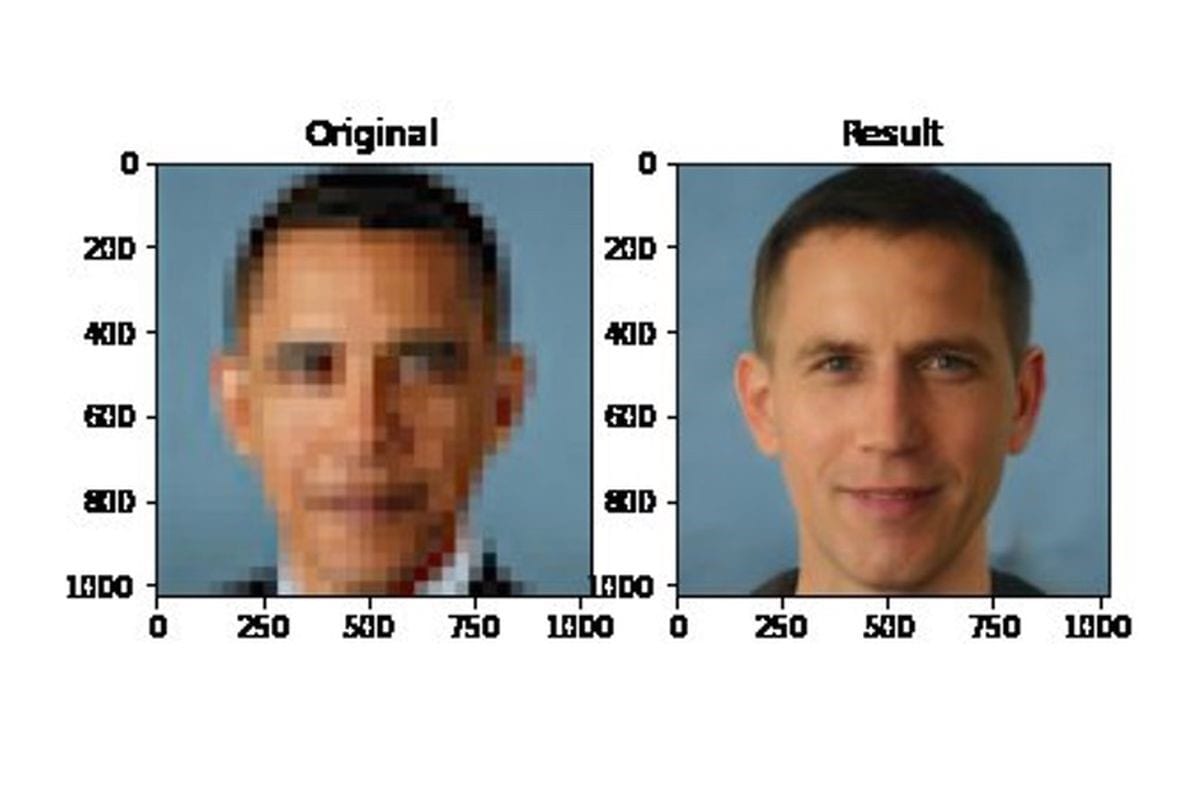

An example from June 2020 shows AI bias in action. In this instance, the AI algorithm converts a blurred image of Barack Obama into the face of a white man.

Source: The Verge

The bias in the converted image came from the data the algorithm was trained on.

AI platforms learn to make their decisions based on training data. The cleanliness and quality of training data have a direct impact on the resulting algorithm and AI application. The amount of data needed will depend on the complexity of your project. In the criminal justice case presented above, it might have been wise to include data from several jurisdictions, creating a more appropriate view of society.

Under-represented sample groups are another common source of bias in AI. For example, imagine you are a company that operates across the whole of the United States. In a dataset of 1 million rows, you have 50 rows from customers who live in Delaware. The Delaware group is highly under-represented. If you make recommendations and decisions using this data and extend them to the country as a whole, the algorithm will give the Delaware group almost no weight and may treat it unfairly.
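A check for under-representation can be run before any model is trained. The sketch below is illustrative only: the function name `representation_report`, the 1% threshold, and the made-up state counts are assumptions, not part of any particular toolkit. It counts the rows per group and flags any group whose share of the dataset falls below the threshold.

```python
from collections import Counter


def representation_report(groups, min_share=0.01):
    """Return each group's row count, share of all rows, and an under-representation flag.

    min_share is an assumed cut-off (1% here); the right value depends on the project.
    """
    counts = Counter(groups)
    total = sum(counts.values())
    return {
        group: {
            "rows": rows,
            "share": rows / total,
            "under_represented": rows / total < min_share,
        }
        for group, rows in counts.items()
    }


# Hypothetical data mirroring the example: 50 Delaware rows in 1 million.
states = ["DE"] * 50 + ["CA"] * 600_000 + ["TX"] * 399_950
report = representation_report(states)

for state, stats in report.items():
    print(state, stats)
```

A flagged group is a prompt to collect more data or to treat conclusions about that group with caution, not a fix in itself.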

In a real-world example, facial analysis technology has shown higher error rates for ethnic minorities, caused by under-representation in the training data.
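Gaps like this only become visible when error rates are measured separately for each group rather than as one overall figure. The following is a minimal sketch of that measurement; the function name `error_rate_by_group` and the labels are made up for illustration.

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Return the fraction of wrong predictions for each group."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != prediction:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up labels: group "B" has fewer rows than group "A".
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
group_ids = ["A", "A", "A", "A", "A", "B", "B", "B"]

rates = error_rate_by_group(y_true, y_pred, group_ids)
for group, rate in rates.items():
    print(f"group {group}: error rate {rate:.2f}")
```

In this toy data the overall error rate is 25%, which hides the fact that every error falls on the smaller group.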

AI can solve the problem of human bias

Although AI can show its own bias, it can also help solve the problems associated with bias in human beings.

Firstly, AI tells us things in black and white. The reason behind a decision cannot be disguised, because the decision follows the rules set out by an algorithm. Humans, by contrast, can let their subconscious mind take over. For example, let’s say somebody called Emma applies for a job at a company. The previous person in that role was also called Emma and lost the business a substantial amount of money. Although the hiring manager won’t state the applicant’s name as a reason for ruling her out of the role, it may well add a degree of bias to the choice.

AI doesn’t have the “view of the world” bias that exists in human beings and won’t judge a person on their name.

In a situation where an AI-based decision appears biased, it is easier to interrogate the system and find the reason why. A Netflix recommendation, for example, is based on a set of rules driven by user data. If the rules appear to be producing biased results, the machine learning model, while complicated, can be investigated to find out why. A similar approach cannot be taken with the human brain, which is more of a “black box” than any machine out there.

In 2018, Netflix was accused by viewers of being creepy and racist. The streaming giant had presented images of black actors and actresses in the on-screen thumbnails shown to black viewers. The cause was not that Netflix was racist, but that the algorithm had used data to work out which images each viewer would engage with most.

Netflix was able to quickly understand the problem by knowing what data powered the decision.

Some recent research papers have shown how AI can improve fairness in justice systems, reducing the racial disparities discussed earlier in this article.

Addressing Bias in Artificial Intelligence Platforms

The objective nature of AI certainly has the potential to negate the element of human bias that we see in every walk of life. However, that is only the case if we can remove the bias from the AI system itself. It might be impossible to remove bias entirely, but there are certainly ways to reduce it drastically in AI platforms.

Diversity can help in addressing Bias in Artificial Intelligence

Having a diverse workforce can have a knock-on effect in removing unwanted bias from AI. When the teams creating AI solutions are all part of the same demographic, the likelihood is that the solution will be tailored to their needs. If businesses ensure that people from mixed demographics and backgrounds are assigned to project teams, it will go some way to resolving the issue of under-representation.

A similar philosophy needs to be applied to training data. It is imperative to get data from several sources to ensure it is varied enough to avoid possible bias. Diverse teams are also more likely to spot bias in testing, before any unwanted behaviors are put into production.

Data Science Toolkits

There are several open-source data science frameworks available for scientists to use, designed to de-bias AI systems. IBM has released a suite of tools in its AI Fairness 360 project, which aims to detect and reduce bias in datasets and machine learning models. Because the tools alert users to the threat of bias, users can try different techniques and frameworks before deploying a solution.
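To give a flavor of what balancing data can mean in practice, the sketch below implements one published pre-processing technique, usually called reweighing, in plain Python. It does not use IBM's toolkit or its API, and the function name `reweighing_weights` and the hiring data are hypothetical. Each row receives a weight equal to the frequency its (group, label) combination would have if group and label were independent, divided by the frequency actually observed.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Return one weight per row so that group and label look independent.

    weight = (count(group) * count(label)) / (n * count(group, label))
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(group, groups, labels, weights):
    """Weighted share of positive labels (1) within one group."""
    rows = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)


# Hypothetical hiring history: 1 = hired, 0 = not hired.
genders = ["M", "M", "M", "M", "M", "M", "F", "F", "F", "F"]
hired = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

weights = reweighing_weights(genders, hired)
print("M:", weighted_positive_rate("M", genders, hired, weights))
print("F:", weighted_positive_rate("F", genders, hired, weights))
```

In the raw data, four of six men and one of four women were hired. After weighting, both groups have the same weighted hiring rate, so a model trained with these sample weights has less reason to treat gender as a signal.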

Improving processes

If data bias is common within the business, it is essential to examine the processes at the root of the problem. Rather than spending time cleansing and formatting data, businesses should look for flaws in how the data is collected, in the design of a website, or in employee behaviors. For example, is the company retail website designed in a way that appeals to all age demographics? A website with photos of teenagers is unlikely to capture the over-60s market (if that is a target).

Working together

Finding and resolving bias in AI is about humans and machines working together. Some of the best models involve a “human-in-the-loop” method. With this technique, the AI system makes recommendations, but each one needs to be verified by a human before the system learns from it. Confidence in the algorithm grows because humans can see and understand what it is doing.
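The human-in-the-loop idea can be sketched in a few lines. Everything here is an assumption made for illustration: the class `HumanInTheLoop`, the stand-in `recommend` and `review` functions, and the applicant fields. The point is the control flow: the model only suggests, the reviewer decides, and only reviewed decisions are stored as future training examples.

```python
from dataclasses import dataclass, field


@dataclass
class HumanInTheLoop:
    """Stores only decisions that a human reviewer has verified."""

    verified_examples: list = field(default_factory=list)
    overrides: int = 0

    def process(self, item, recommend, review):
        suggestion = recommend(item)          # the AI system's recommendation
        decision = review(item, suggestion)   # the human confirms or corrects it
        if decision != suggestion:
            self.overrides += 1               # frequent overrides can signal bias
        self.verified_examples.append((item, decision))
        return decision


def recommend(applicant):
    # Stand-in for a trained model: looks at years of experience only.
    return "interview" if applicant["years_experience"] >= 3 else "reject"


def review(applicant, suggestion):
    # Stand-in for a human reviewer who rescues strong portfolios.
    if suggestion == "reject" and applicant["strong_portfolio"]:
        return "interview"
    return suggestion


loop = HumanInTheLoop()
applicants = [
    {"years_experience": 1, "strong_portfolio": True},
    {"years_experience": 5, "strong_portfolio": False},
    {"years_experience": 1, "strong_portfolio": False},
]
decisions = [loop.process(a, recommend, review) for a in applicants]
print(decisions, "overrides:", loop.overrides)
```

Tracking how often, and for whom, the reviewer overrides the model gives a running signal of where the model's recommendations may be biased.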

Addressing Bias in Artificial Intelligence is not easy but can be done.

AI can undoubtedly help solve the problem of human bias in decision making. Rule-based models can break down the societal challenges that are innate to human behavior, creating ethical and economic benefits.

However, all of this is only possible if businesses invest in researching bias, continue to advance the field, and diversify their processes. AI cannot do this alone and needs humans in the loop to support the process of reducing bias. With that support, confidence and trust in AI systems will grow faster and bring us a more automated future.
